Jonathan Held, James E. Malackowski, Katie Twomey, Mike Gaudet, Christopher Herron, Brooks Armstrong, Theresa Chimento, Timothy Gillihan, Micah Pilgrim, Christopher Wilkens, Colin Young, William Zoeller | JS Held

The Brief

Legal advisors should read this article to:

- Understand emerging litigation risks and regulatory trends

- Assess the importance of explainability and human oversight in AI systems

- Prepare for ethical governance and professional duty challenges

Insurance advisors should read this article to:

- Gain insight into AI’s impact on claims efficiency and risk

- Stay ahead of regulatory and compliance change

- Strengthen ethical leadership and market trust

Executive Summary

As artificial intelligence (AI) reshapes the insurance landscape, insurers face a growing tension between automation and accountability. While AI offers unprecedented speed and efficiency in claims processing, it also introduces new risks, ranging from litigation over biased algorithms to regulatory scrutiny of exaggerated AI capabilities.

This article draws upon J.S. Held’s integrated capabilities across property and casualty claims consulting, litigation support, and ethical AI governance, and examines the need for insurers and insureds to manage the risks of a transforming liability landscape. It emphasizes the importance of explainable systems, ethical oversight, and resilient governance frameworks, which are particularly crucial for legal professionals who face new litigation risks and regulatory trends. By doing so, it helps clients capitalize on opportunities and address the risks associated with AI in claims management and resolution.

Introduction: The Double-Edged Sword of Automation

As artificial intelligence (AI) reshapes the insurance landscape, claims professionals face a growing tension between automation and accountability. Forecasts for the global AI-in-insurance market vary widely, from $16 billion in 2028 (Statista) to approximately $88 billion by 2030 (Mordor Intelligence). Claims automation is projected to be the fastest-growing segment.

The claims process in property and casualty lines continues to evolve due to automation as well. Drones, image-recognition software, and predictive models now inform damage assessments, coverage reviews, and settlement considerations. Automation can effectively speed up traditional claims processes, but only if the systems are properly configured and closely monitored. Yet there are barriers to more rapid adoption. According to one study, 35% of insurers struggle with legacy systems that aren’t easily integrated with advanced technologies. Additionally, 45% of insurers find implementation costs a barrier to adoption.

While AI can assist in expediting the claims process, it can also pose litigation risk. In the US, lawsuits against UnitedHealth, Cigna, and Humana allege that AI-driven tools denied health claims by over-relying on statistical predictions. Similar accusations have emerged in property insurance, where plaintiffs allege that models were biased against certain neighborhoods and discriminated on the basis of race.

Furthermore, without active human oversight, AI systems can inadvertently amplify minor inaccuracies, leading to substantial exposure. When AI is used to aggregate data, expert review remains essential. Every detail must be verified; AI may assist with initial data processing, but ultimately, expert involvement is non-negotiable.

For insurers, this automation paradox is particularly acute. Efficiency gains are real: AI can process claim data in seconds. But a lack of explainability makes it difficult to defend those outcomes in court. One risk lies in the black-box effect: you put data in, and it gives you output. If you have no idea what’s going on inside the box, you are merely trusting that what it gave you is right. The practitioner must be able to validate the results. The person whose name is on a report, or the person who is giving testimony about their findings, is ultimately responsible.

The difficulty of defending black-box algorithms in high-stakes decisions explains why many insurers remain cautious, using AI primarily for administrative tasks and early triage while retaining human adjusters for crucial determinations.

Smarter, Faster Claims Processing

AI offers unmatched speed in document review and triage; combined with drones and real-time imaging, these tools enable insurers to respond to catastrophic events in a matter of days. In property claims, models trained on historical loss data can instantly compare damage photos, identify policy triggers, and recommend the next steps. By accelerating these tasks, AI enables insurers to scale claims operations more efficiently, helping to reduce their overhead, streamline resolution timelines, and alleviate the manual burden of sorting, coding, and summarizing data.

AI-enabled tools may help resolve complex claims more efficiently. For more routine claims, AI combined with the Internet of Things (IoT) gives insurers predictive and monitoring technology for handling claims. A claim can be automatically triggered and processed without the consumer having to file one, saving time and expense for everyone. Some recent examples include:

- One reinsurance company has explored a Flight Delay Compensation tool. Built on a model that responds to flight delays, this parametric insurance product allows customers who purchase a ticket from an airline or online travel agency to receive claim payments automatically when delays are confirmed, eliminating the need to file a claim.

- Another insurer is using AI to accelerate claims processing during extreme weather events. Its system automates high-volume, low-complexity claims, such as food spoilage, thereby reducing turnaround times from days to hours. AI agents handle coverage checks and fraud detection, while experienced professionals make the final payout decisions.
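
The two examples above share a simple pattern: a rule fires automatically on confirmed data, and anything non-routine is escalated to a person. The following is a minimal illustrative sketch of that pattern, not a description of either insurer's actual system. All names (`DelayPolicy`, `FlightStatus`, `evaluate_trigger`, `route_claim`) and all thresholds are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DelayPolicy:
    """Hypothetical parametric cover: a fixed payout once a delay threshold is met."""
    policy_id: str
    flight_number: str
    threshold_minutes: int  # assumed trigger level, e.g. 120 minutes
    payout: float           # assumed fixed benefit amount


@dataclass(frozen=True)
class FlightStatus:
    """Hypothetical status record from a flight-data feed."""
    flight_number: str
    delay_minutes: int
    confirmed: bool


def evaluate_trigger(policy: DelayPolicy, status: FlightStatus) -> float:
    """Return the payout owed, or 0.0 if the parametric trigger is not met."""
    if status.flight_number != policy.flight_number:
        return 0.0
    if not status.confirmed:
        return 0.0  # pay only on confirmed delays
    if status.delay_minutes < policy.threshold_minutes:
        return 0.0
    return policy.payout


def route_claim(amount: float, fraud_score: float,
                auto_limit: float = 500.0, fraud_cutoff: float = 0.2) -> str:
    """Triage sketch: the system only recommends; a person makes the final decision.

    The limits are assumed values, not industry standards.
    """
    if fraud_score >= fraud_cutoff or amount > auto_limit:
        return "human_review"
    return "recommend_payment"
```

In this sketch the parametric payout depends only on explicit, inspectable conditions, which is part of what makes such products easier to explain and audit than an opaque model. The triage function returns a recommendation rather than a payment, reflecting the point that experienced professionals retain the final payout decision.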

Strengthening Expert Testimony and Litigation Support

AI is also enhancing expert analysis and presentation in litigation. From 3D visualizations of structural failures to rapid keyword searches in discovery, the technology augments, not replaces, human expertise.

Engineers and forensic analysts report using AI for pressure-testing conclusions, identifying logical inconsistencies, and verifying calculations before peer review. In complex cases, AI-assisted modeling can generate reconstructions that clarify cause-and-effect relationships for judges and juries, thereby enhancing their understanding.

Still, experts emphasize a critical caveat: The first question you’ll be asked in deposition is, ‘Were you at the site?’ If the answer is no, your credibility drops instantly. AI cannot substitute for firsthand observation or professional judgment. It is a tool for precision, not a shield from accountability.

As AI plays a larger role in expert opinions, those opinions will face greater scrutiny in litigation. Opposing counsel may challenge the expert’s findings, demanding to know whether they truly derive from the expert’s specialized knowledge or rest on a determination made by AI. If it is the latter, a court may exclude the findings and strike the expert’s opinion.

Emerging Liability Under Financial Lines

AI exposure extends far beyond claims processing. Corporate misrepresentations of AI capabilities, known as “AI-washing,” are increasingly drawing the attention of regulators, investors, and litigators. Similar to greenwashing accusations of past decades, AI-washing occurs when a company exaggerates its use of or competence in AI systems. In one example, a former CEO of an e-commerce company was indicted by federal prosecutors in New York for making false and misleading claims about his company’s proprietary AI technology and its operational capabilities.

This presents a new frontier for Directors & Officers and Errors & Omissions liability. Executives who tout unverified AI capabilities may face allegations of misleading shareholders or regulators. Insurers underwriting these policies must evaluate not only the insured’s operational risk but also their public claims about technology.

Conversely, insurers themselves are vulnerable. An AI-driven claims platform that produces inconsistent or discriminatory outcomes could trigger litigation for negligence, breach of contract, or even civil rights violations. Compliance and auditability are no longer optional; rather, they are integral to underwriting integrity.

Ethical Governance and Professional Duty

Ethical responsibilities surrounding AI have raised concerns among some healthcare organizations, including the American Medical Association. The American Hospital Association (AHA) has proposed safeguards for the use of AI by insurers, including transparency about when and how the technology is used. The AHA also proposed that insurers disclose the data used for training and that “plain language” denials clearly indicate whether AI influenced the denial and what role the technology played in the decision. The AHA also believes that denials must be reviewed by a qualified clinician.

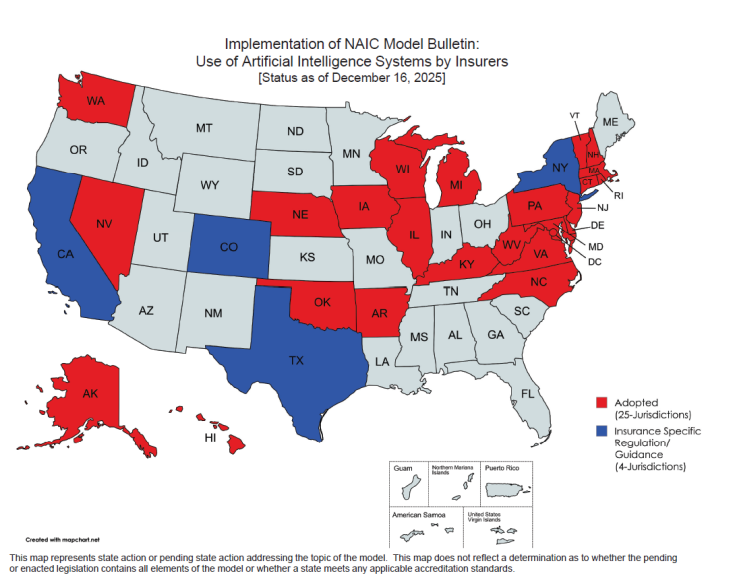

The concerns extend beyond healthcare and have informed actions by the National Association of Insurance Commissioners (NAIC), the US standard-setting and regulatory support organization. The NAIC created a Model Bulletin for the Use of AI Systems by Insurers. Several states have adopted the measures in the bulletin.

See Image 1.

The NAIC bulletin notes that insurers are expected to adopt practices, including governance frameworks and risk management protocols, which are designed to ensure that the use of AI Systems does not result in: 1) unfair trade practices…; or 2) unfair claims settlement practices.

Several insurance industry groups, including the National Association of Mutual Insurance Companies, the American Property Casualty Insurance Association, the American InsurTech Council, the American Council of Life Insurers, and the Blue Cross Blue Shield Association, all believe that implementation of the NAIC Model Bulletin as law is premature because there are other tools regulators can use to oversee the use of AI.

However, in December 2025, the Trump Administration issued an executive order intended to limit the role of states in regulating AI. The order directs the Attorney General to establish an AI Litigation Task Force to challenge state AI laws that the Administration deems harmful to innovation and that create costly compliance requirements. The order specifically calls out California’s and Colorado’s laws. It highlights the Colorado AI Act’s prohibition on “algorithmic discrimination” as an example of harmful state overreach, arguing that such provisions compel companies to embed ideological bias and generate false results. California will arguably feel the greatest impact from the order, since it has enacted more AI laws than any other state.

The executive order was met with concern by NAIC. In a statement, NAIC said the executive order “creates significant unintended consequences… [that] could implicate routine analytical tools insurers use every day and prevent regulators from addressing risks in areas like rate setting, underwriting, and claims processing—even when no true AI is involved.”

Meanwhile, regulators in several other countries are taking the use of AI in the industry just as seriously. On August 2, 2026, the European Union’s AI Act, which establishes a comprehensive framework for artificial intelligence, is scheduled to become generally applicable. The Act takes a risk-based approach, distinguishing four categories: prohibited systems, high-risk systems, systems subject to transparency obligations, and general-purpose AI systems. The Act most directly affects insurers through the high-risk category, which includes AI systems used for risk assessment and pricing in life and health insurance. Insurers that use such systems without meeting the Act’s requirements may be subject to penalties.

The Deepfake and Fraud Frontier

Generative AI is rapidly expanding the scope of potential fraud, even as AI’s capacity for detecting patterns inconsistent with legitimate claims improves insurers’ ability to uncover it. From fabricated invoices to doctored site photos, AI makes it possible for anyone to manufacture evidence that looks authentic. This evolution complicates the verification of documentation, particularly in large catastrophe or surety claims.

For global insurers facing multi-jurisdictional regulations, AI-driven compliance monitoring can flag inconsistencies across subsidiaries and partners. Combined with digital forensics and eDiscovery, these systems can reduce exposure to cross-border fraud, sanctions violations, and anti-money laundering breaches, aligning operational efficiency with ethical governance. In addition, this technology can be deployed defensively. Investigation teams already utilize AI-powered image and metadata verification to determine authenticity. As the volume of synthetic content grows, digital forensics will become a standard component of claims review. The integrity of evidence, and the trust it underpins, will increasingly depend on who has the better algorithms and governance protocols.

Conclusion: Ethical Leadership as Market Differentiator

As AI automation becomes increasingly prevalent in the insurance industry, transparency alone is not enough; responsible governance and expert oversight are also essential. Insurers that consistently demonstrate the responsible use of AI, disclose model use, validate fairness, and communicate openly will distinguish themselves in the marketplace.

By combining forensic accounting, digital forensics, engineering, and behavioral science, insurers can gain a deeper understanding of the technical and ethical aspects of AI adoption. This not only strengthens internal decision-making but also enhances auditability.
